Previously, server-side changes were developed offshore, and skeleton JSP files were then handed to the onshore web team, who applied the styling (CSS) and front-end validation (JavaScript). These JSP files were managed and edited within the CMS, which meant that content editors needed to know technologies such as HTML, CSS and JSP. Consequently, the web team was burdened with managing the content for the live website, usually at very short notice. Furthermore, the server side, the CMS and additional applications were bundled into one monolithic Java Enterprise Archive (EAR) distribution and deployed manually to application servers. Testing was also completely manual, and although there were a number of test environments, each was configured slightly differently and none was representative of the production environment. The entire deployment process was very labour intensive, so releasing into production (performed by a separate production support team) was an exhausting and painful experience for those involved. Releases had to be scheduled every two weeks and performed in the early hours of the morning, with a number of people on call to provide support.

Instead of reusing any of the existing codebase, it was decided to start from the ground up, using a new CMS as the platform for a new website that would serve multiple channels and allow the marketing team to manage its content.

The business was also undergoing a transition from a traditional 'waterfall' method of development to using 'agile' processes. Behaviour Driven Development (BDD) was employed to capture and refine requirements.

A number of technologies and tools were also adopted to enhance productivity and deliver value. We decided to abandon the heavyweight enterprise application server, as there was no need for the additional features it provided, and to use the more lightweight Tomcat servlet container instead. Jira [1] was used to capture requirements as stories, to raise issues and defects, and generally to plan and track the project. Confluence [2] was adopted for documentation and as a collaboration tool for discussing designs. Bamboo [3] was used as the continuous integration and build tool, and all new source code was required to have unit tests. Functional and acceptance tests were developed using Cucumber [4] and Ruby [5] to create an automated regression testing suite.

Bamboo was configured to monitor version control and to trigger a build and test run every time code was committed. With third-party plugins such as Sonar, the team gained visibility of test coverage and code quality. Using Bamboo to perform deployments into all environments also provided confidence in the deployment process, so that there were no surprises during release into production. As the acceptance tests were also built and executed by Bamboo, it was possible to trigger them after a deployment using Bamboo's REST interface.

Bamboo deployment plans allow software artefacts created during the Bamboo build plans to be deployed into a given environment.

Figure: Bamboo Deployment Plans

Figure: Bamboo Deployment Tasks

Figure: Bamboo Deployment Plan Variables

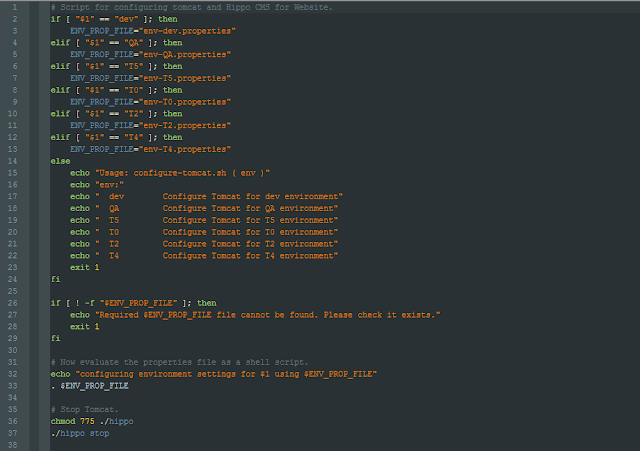

Figure: Deployment Script

Figure: Environment Properties

Bamboo now simply needs to invoke this script as shown below.

Figure: Invoke Deployment Script

curl --user "$bamboo_smoke_test_user:$bamboo_smoke_test_password" -X POST "http://galbamboo:8085/rest/api/latest/queue/CMS-SMK?bamboo.variable.CMS_ENV=qa"
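The REST call above can be wrapped so that the same step is reusable for any environment. The following is only a minimal sketch: the function names `queue_url` and `queue_plan` and the `BAMBOO_URL` override are illustrative assumptions, while the host, the plan key `CMS-SMK`, the `CMS_ENV` plan variable and the credential variables are taken from the command above. The added `--fail` flag makes curl exit with a non-zero status on an HTTP error, so a failed request would fail the calling task rather than pass unnoticed.

```shell
#!/bin/sh
# Sketch only: queue a Bamboo plan for a given environment after a deployment.
# BAMBOO_URL and both function names are assumptions made for illustration.

BAMBOO_URL="${BAMBOO_URL:-http://galbamboo:8085}"

# Print the REST URL that queues plan $1 with the CMS_ENV plan variable set to $2.
queue_url() {
    printf '%s/rest/api/latest/queue/%s?bamboo.variable.CMS_ENV=%s\n' \
        "$BAMBOO_URL" "$1" "$2"
}

# POST to the queue URL using the credentials Bamboo exposes to the task.
# --fail turns HTTP 4xx/5xx responses into a non-zero exit status.
queue_plan() {
    curl --fail --silent --show-error \
         --user "${bamboo_smoke_test_user}:${bamboo_smoke_test_password}" \
         -X POST "$(queue_url "$1" "$2")"
}
```

With these functions sourced, a script task could run `queue_plan CMS-SMK qa` once the deployment step has finished, and `queue_url CMS-SMK qa` can be used on its own to check the URL without contacting Bamboo.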

References:

[1] https://www.atlassian.com/software/jira/

[2] https://www.atlassian.com/software/confluence/

[3] https://www.atlassian.com/software/bamboo/

[4] https://cucumber.io/

[5] https://www.ruby-lang.org/en/